Introduction to Speech Recognition, Speech-to-Text APIs, and Benchmarking

What is Speech Recognition?

Speech recognition is an interdisciplinary subfield of computer science and computational linguistics that develops methodologies and technologies enabling computers to recognize spoken language and convert it into text. It is also known as automatic speech recognition (ASR), computer speech recognition, or speech to text (STT). It incorporates knowledge and research from computer science, linguistics, and computer engineering. Although it is commonly confused with voice recognition, speech recognition focuses on converting speech from a verbal format to a text one, whereas voice recognition only seeks to identify an individual user’s voice.

Key Features of Effective Speech Recognition

Many speech recognition applications and devices are available, but the more advanced solutions use AI and machine learning. They integrate grammar, syntax, structure, and composition of audio and voice signals to understand and process human speech. Ideally, they learn as they go — evolving responses with each interaction. The following are some of the key features.

Streaming speech recognition: Receive real-time speech recognition results as the model processes the audio input streamed from your application’s microphone or sent from a pre-recorded audio file.

Speech adaptation: Customize speech recognition to transcribe domain-specific terms and rare words by providing hints that boost the transcription accuracy of specific words or phrases. Automatically convert spoken numbers into addresses, years, currencies, and more using classes.

Multichannel recognition: Speech-to-Text can recognize distinct channels in multichannel situations (e.g., phone calls, video conferences, online interviews, etc.) and annotate the transcripts to preserve the order.

Content filtering: Profanity filter helps you detect inappropriate or unprofessional content in your audio data and filter out profane words in text results.

Automatic punctuation: Speech-to-Text accurately punctuates transcriptions (e.g., commas, question marks, and periods).

Speaker diarization: Know who said what by receiving automatic predictions about which of the speakers in a conversation spoke each utterance.

Speech Recognition Use Cases

A wide range of industries use different applications of speech technology today, helping businesses and consumers save time and even lives. The following are some examples.

Automotive: Speech recognizers improve driver safety by enabling voice-activated navigation systems and search capabilities in car radios and infotainment systems.

Technology: Virtual assistants are increasingly integrated into our daily lives, particularly on our mobile devices. We use voice commands to access them on our smartphones, for example Google Assistant or Apple’s Siri for tasks such as voice search, or on our smart speakers, for example Amazon’s Alexa or Microsoft’s Cortana, to play music.

Healthcare: Doctors and nurses leverage dictation applications to capture and log patient diagnoses and treatment notes.

Sales: Speech recognition can help a call center transcribe thousands of phone calls between customers and agents to identify common call patterns and issues. Cognitive bots can also talk to people via a webpage, answering common queries and solving basic requests so that customers do not have to wait for a contact center agent to be available.

Security: As technology integrates into our daily lives, security protocols are an increasing priority. Voice-based authentication adds a viable level of security.

Speech Recognition Algorithms

Speech recognizers are made up of a few components: the speech input, feature extraction, feature vectors, a decoder, and a word output. The decoder leverages acoustic models, a pronunciation dictionary, and language models to determine the appropriate output. Speech recognition technology is evaluated on its accuracy, usually expressed as word error rate (WER), and on its speed. Several factors can affect word error rate, such as pronunciation, accent, pitch, volume, and background noise. Reaching human parity, meaning an error rate on par with that of two humans speaking, has long been the goal of speech recognition systems. Various algorithms and computation techniques are used to convert speech into text and improve the accuracy of the transcription. Below are brief explanations of some of the most commonly used methods.

Hidden Markov models: Many general-purpose speech recognition systems have traditionally been based on Hidden Markov Models (HMMs). These are statistical models that output a sequence of symbols or quantities. HMMs are used in speech recognition because a speech signal can be viewed as a piecewise stationary signal or a short-time stationary signal. On a short time scale (e.g., 10 milliseconds), speech can be approximated as a stationary process. Speech can be thought of as a Markov model for many stochastic purposes. While a Markov chain model is useful for observable events, such as text inputs, hidden Markov models allow us to incorporate hidden events, such as part-of-speech tags, into a probabilistic model. They are used as sequence models within speech recognition, assigning a label to each unit in the sequence (words, syllables, sentences, etc.). These labels create a mapping with the provided input, allowing the model to determine the most appropriate label sequence.
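Finding "the most appropriate label sequence" in an HMM is commonly done with the Viterbi algorithm. The following is a minimal sketch on a toy model, not a production recognizer: the two hidden states ("sil" and "speech"), the two observation symbols ("low" and "high" energy), and all probabilities are made-up values chosen only for illustration.

```python
from typing import Dict, List, Tuple


def viterbi(
    observations: List[str],
    states: List[str],
    start_p: Dict[str, float],
    trans_p: Dict[str, Dict[str, float]],
    emit_p: Dict[str, Dict[str, float]],
) -> Tuple[float, List[str]]:
    """Return (probability, path) of the most likely hidden-state sequence."""
    # best[t][s] = (probability of the best path ending in state s at time t,
    #               the previous state on that path)
    best = [{s: (start_p[s] * emit_p[s][observations[0]], None) for s in states}]
    for obs in observations[1:]:
        prev = best[-1]
        column = {}
        for s in states:
            # Pick the predecessor state r that maximizes the path probability.
            column[s] = max(
                (prev[r][0] * trans_p[r][s] * emit_p[s][obs], r) for r in states
            )
        best.append(column)

    # Backtrack from the most probable final state.
    last = max(states, key=lambda s: best[-1][s][0])
    prob = best[-1][last][0]
    path = [last]
    for column in reversed(best[1:]):
        path.append(column[path[-1]][1])
    path.reverse()
    return prob, path


# Hypothetical toy model: hidden states are "sil" (silence) and "speech";
# observations are coarse frame energies.
states = ["sil", "speech"]
start_p = {"sil": 0.8, "speech": 0.2}
trans_p = {
    "sil": {"sil": 0.7, "speech": 0.3},
    "speech": {"sil": 0.2, "speech": 0.8},
}
emit_p = {
    "sil": {"low": 0.9, "high": 0.1},
    "speech": {"low": 0.2, "high": 0.8},
}

prob, path = viterbi(["low", "high", "high", "low"], states, start_p, trans_p, emit_p)
print(path)  # ['sil', 'speech', 'speech', 'sil']
```

Real systems work in log-probabilities to avoid numerical underflow on long sequences; plain probabilities are used here only to keep the sketch short.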

Dynamic time warping (DTW): Dynamic time warping is an approach that was historically used for speech recognition but has now largely been displaced by the more successful HMM-based approach. Dynamic time warping is an algorithm for measuring similarity between two sequences that may vary in time or speed. A well-known application has been automatic speech recognition, to cope with different speaking speeds. In general, it is a method that allows a computer to find an optimal match between two given sequences (e.g., time series) with certain restrictions. That is, the sequences are “warped” non-linearly to match each other.
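As a rough illustration of the warping idea, the sketch below computes the classic DTW distance between two one-dimensional sequences with dynamic programming. It assumes an absolute-difference local cost and no window constraint; real speech systems compare sequences of feature vectors rather than single numbers.

```python
from typing import Sequence


def dtw_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Return the DTW distance between two 1-D sequences."""
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] = minimal accumulated cost of aligning a[:i] with b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of: step in a only, step in b only, step in both.
            cost[i][j] = local + min(
                cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1]
            )
    return cost[n][m]


# The same "shape" spoken at two different speeds aligns with zero cost.
print(dtw_distance([1, 2, 3], [1, 2, 2, 3]))  # 0.0
```

The second sequence repeats one value, which mimics a slower speaker; DTW absorbs the repetition by mapping both copies to the same element of the first sequence.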

Neural networks: Neural networks emerged as an attractive acoustic modeling approach in ASR in the late 1980s. Since then, neural networks have been used in many aspects of speech recognition such as phoneme classification, phoneme classification through multi-objective evolutionary algorithms, isolated word recognition, audio-visual speech recognition, audio-visual speaker recognition, and speaker adaptation. Neural networks make fewer explicit assumptions about the statistical properties of features than HMMs do and have several qualities that make them attractive models for speech recognition. When used to estimate the probabilities of a speech feature segment, neural networks allow discriminative training in a natural and efficient way. However, despite their effectiveness in classifying short-time units such as individual phonemes and isolated words, early neural networks were rarely successful for continuous recognition tasks because of their limited ability to model temporal dependencies. One approach to this limitation was to use neural networks as a pre-processing, feature transformation, or dimensionality reduction step before HMM-based recognition. More recently, LSTM and related recurrent neural networks (RNNs) and Time Delay Neural Networks (TDNNs) have demonstrated improved performance in this area.

Deep feedforward and recurrent neural networks: A deep feedforward neural network (DNN) is an artificial neural network with multiple hidden layers of units between the input and output layers. DNN architectures generate compositional models, where extra layers enable the composition of features from lower layers, giving a huge learning capacity and thus the potential to model complex patterns of speech data. One fundamental principle of deep learning is to do away with hand-crafted feature engineering and to use raw features. This principle was first explored successfully in a deep autoencoder architecture on the “raw” spectrogram or linear filter-bank features, showing its superiority over the Mel-Cepstral features, which contain a few stages of fixed transformation from spectrograms. The true “raw” features of speech, waveforms, have more recently been shown to produce excellent larger-scale speech recognition results.

End-to-end automatic speech recognition: Since 2014, there has been much research interest in “end-to-end” ASR. Traditional phonetic-based approaches (i.e., all HMM-based models) required separate components and training for the pronunciation, acoustic, and language models. End-to-end models jointly learn all the components of the speech recognizer. This is valuable because it simplifies both training and deployment. The first attempt at end-to-end ASR was with Connectionist Temporal Classification (CTC)-based systems introduced by Alex Graves of Google DeepMind and Navdeep Jaitly of the University of Toronto in 2014. The model consisted of recurrent neural networks and a CTC layer. Jointly, the RNN-CTC model learns the pronunciation and acoustic model together; however, it is incapable of learning the language model because of conditional independence assumptions similar to those of an HMM. Consequently, CTC models can directly learn to map speech acoustics to English characters, but the models make many common spelling mistakes and must rely on a separate language model to clean up the transcripts. A large-scale CNN-RNN-CTC architecture was presented in 2018 by Google DeepMind, achieving 6 times better performance than human experts. An alternative to CTC-based models is attention-based models. The model named “Listen, Attend and Spell” (LAS) literally “listens” to the acoustic signal, pays “attention” to different parts of the signal, and “spells” out the transcript one character at a time. Unlike CTC-based models, attention-based models do not have conditional-independence assumptions and can learn all the components of a speech recognizer, including the pronunciation, acoustic, and language models, directly. This means that, during deployment, there is no need to carry around a language model, which makes these models very practical for applications with limited memory.
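To make the mapping from per-frame CTC outputs to characters more concrete, here is a minimal sketch of greedy (best-path) CTC decoding: take the most likely symbol in each frame, collapse consecutive repeats, and drop the blank symbol. The four-symbol alphabet and the frame probabilities are invented for illustration, and practical systems usually use beam search with a language model instead of this greedy rule.

```python
from typing import List, Sequence


def ctc_greedy_decode(
    frame_probs: Sequence[Sequence[float]],
    alphabet: Sequence[str],
    blank: int = 0,
) -> str:
    """Collapse the per-frame argmax sequence into a transcript."""
    # Most likely symbol index for each frame.
    best = [max(range(len(p)), key=lambda i: p[i]) for p in frame_probs]
    decoded: List[str] = []
    prev = None
    for idx in best:
        # Emit a symbol only when it differs from the previous frame
        # and is not the blank symbol.
        if idx != prev and idx != blank:
            decoded.append(alphabet[idx])
        prev = idx
    return "".join(decoded)


# Hypothetical alphabet: index 0 is the CTC blank.
alphabet = ["_", "c", "a", "t"]
frames = [
    [0.1, 0.7, 0.1, 0.1],    # c
    [0.1, 0.6, 0.2, 0.1],    # c (repeat, collapsed)
    [0.8, 0.1, 0.05, 0.05],  # blank
    [0.1, 0.1, 0.7, 0.1],    # a
    [0.2, 0.1, 0.6, 0.1],    # a (repeat, collapsed)
    [0.7, 0.1, 0.1, 0.1],    # blank
    [0.1, 0.1, 0.1, 0.7],    # t
]
print(ctc_greedy_decode(frames, alphabet))  # cat
```

Because each frame is decoded independently of the others, nothing in this procedure knows which character sequences form valid words, which is why CTC transcripts benefit from a separate language model.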

Speech Recognition Architecture

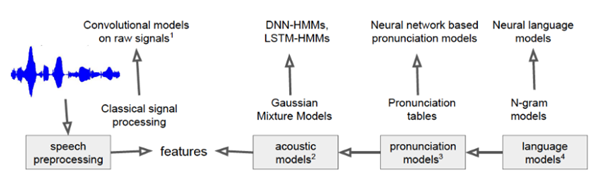

Deep Neural Network Architecture for Speech Recognition

Over time, researchers have noticed that each of the traditional components in the above figure could work more effectively if it were replaced with a neural network. Instead of N-gram language models, we can build neural language models and use them to rescore the hypotheses produced by a first-pass speech recognition system. For pronunciation models, a neural network can work out the pronunciation of a new sequence of characters that we’ve never seen before. For acoustic models, we can build deep neural networks (such as LSTM or transformer-based models) to get much better classification accuracy on the features of the current frame. Even the speech pre-processing steps were found to be replaceable with convolutional neural networks operating on raw speech signals.

These so-called end-to-end models encompass more and more components of the pipeline discussed above. The two most popular ones are (1) Connectionist Temporal Classification (CTC), which is in wide use these days at Baidu and Google but requires a lot of training; and (2) Sequence-to-Sequence (Seq-2-Seq), which doesn’t require manual customization.

Some Open-Source Trained Speech Recognition Models

The following custom models are some of the state-of-the-art models built from scratch by companies such as Baidu AI and Facebook AI Research.

Deep Speech 1: Scaling up end-to-end Speech Recognition

The authors of this paper are from Baidu Research’s Silicon Valley AI Lab. Deep Speech 1 doesn’t require a phoneme dictionary, but it uses a well-optimized RNN training system that employs multiple GPUs. The model achieves a 16% error on the Switchboard 2000 Hub5 dataset. GPUs are used because the model is trained using thousands of hours of data. The model has also been built to effectively handle noisy environments. The major building block of Deep Speech is a recurrent neural network that has been trained to ingest speech spectrograms and generate English text transcriptions. The purpose of the RNN is to convert an input sequence into a sequence of character probabilities for the transcription. The RNN has five layers of hidden units, with the first three layers not being recurrent. At each time step, the non-recurrent layers work on independent data. The fourth layer is a bi-directional recurrent layer with two sets of hidden units. One set has forward recurrence while the other has a backward recurrence. After prediction, Connectionist Temporal Classification (CTC) loss is computed to measure the prediction error. Training is done using Nesterov’s Accelerated gradient method.

Deep Speech 2: End-to-End Speech Recognition in English and Mandarin

In the second iteration of Deep Speech, the authors use an end-to-end deep learning method to recognize Mandarin Chinese and English speech. The proposed model can handle different languages and accents, as well as noisy environments. The authors use high-performance computing (HPC) techniques to achieve a 7x speedup over their previous model. In their data center, they implement Batch Dispatch with GPUs. The English speech system is trained on 11,940 hours of speech, while the Mandarin system is trained on 9,400 hours. During training, the authors use data synthesis to augment the data. The architecture has up to 11 layers made up of bidirectional recurrent layers and convolutional layers. Computationally, the model is reported to be 8x faster than Deep Speech 1. The authors use Batch Normalization for optimization. For the activation function, they use the clipped rectified linear unit (ReLU).
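For readers unfamiliar with the clipped rectified linear activation mentioned above, the sketch below shows the idea: negative inputs are set to zero and large inputs are capped. The ceiling of 20 is an assumption here (it is the value commonly quoted for the Deep Speech models), so treat it as a tunable parameter rather than a fixed part of the method.

```python
def clipped_relu(x: float, ceiling: float = 20.0) -> float:
    """Clipped ReLU: min(max(x, 0), ceiling).

    The default ceiling of 20 is an assumed value, not taken from this article.
    """
    return min(max(x, 0.0), ceiling)


for value in (-3.0, 5.0, 50.0):
    print(value, "->", clipped_relu(value))
```

Capping the activation keeps values in recurrent layers from growing without bound, which is the usual motivation for clipping.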

Wav2Vec: Unsupervised Pre-training for Speech Recognition

Authors from Facebook AI Research explore unsupervised pre-training for speech recognition by learning representations of raw audio. The result is Wav2Vec, a model that’s trained on a huge unlabeled audio dataset. The representations obtained from this are then used to improve acoustic model training. A simple multi-layer convolutional neural network is pre-trained and optimized through a noise contrastive binary classification task. Wav2Vec achieves a 2.43% WER on the nov92 test set. The approach used in pre-training is optimizing the model to predict future samples from a single context. The model takes a raw audio signal as input and then applies an encoder network and a context network. The encoder network embeds the audio signal in a latent space, and the context network combines multiple time-steps of the encoder to obtain representations that have been contextualized. The objective function is then computed from both networks. Layers in the encoder and context networks are made up of a causal convolution with 512 channels, a group normalization layer, and a ReLU nonlinearity activation function. The representations produced by the context network during training are fed to the acoustic model. Training and evaluation of acoustic models are done using the wav2letter++ toolkit. For decoding, a lexicon and a separate language model trained on the WSJ language modeling dataset are used.

Wav2Vec 2.0: Self-Supervised Learning of Speech Representations

The authors of this paper show for the first time that learning powerful representations from speech audio alone, followed by fine-tuning on transcribed speech, can outperform the best semi-supervised methods while being conceptually simpler. Wav2vec 2.0 masks the speech input in the latent space and solves a contrastive task defined over a quantization of the latent representations, which are jointly learned. Experiments using all labeled data of LibriSpeech achieve 1.8/3.3 WER on the clean/other test sets. When lowering the amount of labeled data to one hour, wav2vec 2.0 outperforms the previous state of the art on the 100-hour subset while using 100 times less labeled data. Using just ten minutes of labeled data and pre-training on 53k hours of unlabeled data still achieves 4.8/8.2 WER. This demonstrates the feasibility of speech recognition with limited amounts of labeled data.

Speech Recognition APIs

Google Cloud Speech API: This API is part of the Google Cloud infrastructure and converts human speech into text. It supports more than 110 languages. The system can be customized by providing a list of words that are likely to be recognized. The API works in both batch and real-time modes and is robust to background noise in the audio. A filter for inappropriate words is available for some languages. The system is built on deep neural networks and can improve over time. The files you want to process can be sent directly to the API or stored on Google Cloud Storage. Pricing is usage-based: up to 60 minutes of processed audio is free for each user, and beyond that you pay 0.006 USD per 15 seconds. The total monthly capacity is limited to 1 million minutes of audio.
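The pricing described above (60 free minutes, then 0.006 USD per 15 seconds) can be turned into a quick estimate. This is only a back-of-the-envelope sketch: the function name is hypothetical, it assumes billing is rounded up to whole 15-second increments on the total duration, and the figures are the ones quoted in this article, which may not match current pricing.

```python
import math


def estimate_cost_usd(
    total_seconds: float,
    free_minutes: float = 60,
    price_per_increment: float = 0.006,
    increment_seconds: int = 15,
) -> float:
    """Estimate the charge for a given amount of processed audio."""
    # Audio beyond the free allowance is billable.
    billable_seconds = max(0.0, total_seconds - free_minutes * 60)
    # Assumption: partial increments are rounded up to a full increment.
    increments = math.ceil(billable_seconds / increment_seconds)
    return round(increments * price_per_increment, 6)


# Two hours of audio: the first hour is free, the second is billed.
print(estimate_cost_usd(2 * 3600))  # 1.44
```

The same function can approximate other per-increment price lists by changing the default arguments.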

IBM Watson Speech to Text: The IBM Watson Speech to Text API lets you transcribe audio (any form of speech data) into written text so that you can include accurate speech recognition capabilities in your work environment. This speech recognition service is versatile and robust. The API allows you to automatically convert audio in real time, build voice-controlled applications, and customize the speech recognition model to suit your content and language preferences. You can also use the API for a wide range of use cases such as transcribing audio from a microphone, transcribing call center recordings, or analyzing audio recordings using keywords. The IBM Watson API supports seven languages. The IBM Watson Speech to Text API has a free plan that allows you to transcribe 100 minutes per month. For more extensive usage, it has different pricing tiers, ranging from $0.02 per minute (for up to 250,000 minutes) to $0.01 per minute (for more than one million minutes).

Microsoft Azure Speech API: It quickly and accurately transcribes audio to text in more than 85 languages and variants. You can customize models to enhance accuracy for domain-specific terminology. You can get more value from spoken audio by enabling search or analytics on transcribed text or facilitating action. It is possible to add specific words to your base vocabulary or build your own speech-to-text models and run Speech to Text anywhere — in the cloud or at the edge in containers. You can access the same robust technology that powers speech recognition across Microsoft products! The free plan consists of 5 audio hours/month with standard and custom features. The paid plan costs $1 per audio hour with standard features and $1.40 per audio hour with custom features and additional pricing for endpoint hosting.

Amazon Transcribe: This service is part of the Amazon Web Services infrastructure. You can analyze audio files stored in Amazon S3 and get back the text transcribed from the audio. Amazon Transcribe can add punctuation and text formatting. Another valuable feature is its support for telephony audio: because audio from phone conversations is often of low quality, the developers of Amazon Transcribe process this type of audio in a specific way. The system adds a timestamp for each word, so you can match each word in the text to the corresponding place in the audio file. It is expected that the API will soon be able to recognize multiple speakers and label their voices in the text. There is a Free Tier: you can use the service for free during the first 12 months after registration (up to 60 minutes of audio per month). After this period, you pay 0.0004 USD per second of processed audio.

Rev.ai API: The Rev.ai API allows developers to access a robust speech recognition system and build speech-to-text capabilities into their applications. With the Rev.ai API, you can quickly and accurately convert human voice to text transcriptions and do more with your audio and video content. The service comes with a wide range of features, including support for punctuation and capitalization, timestamp generation, the ability to recognize multiple speakers and attribute text to each, and the ability to transcribe speech to text during live streaming. The free quota is 240 fifteen-second units of file duration per month (60 minutes in total). Thereafter, each fifteen-second unit is charged at $0.000875.

Wit API: The Wit API provides natural language processing and voice interface capabilities, which you can use to create applications and devices that can interpret users’ speech. With the Wit API, you can include a state-of-the-art natural language interface to your application so that users can simply talk to express their intent, instead of following complicated steps or clicking many buttons. For example, you can use the API to create voice-controlled commands, robot dialog interfaces, and Siri-style personal assistants. The API supports a limited number of languages but it is provided for free.

Speech Recognition APIs and Custom Training Benchmarking

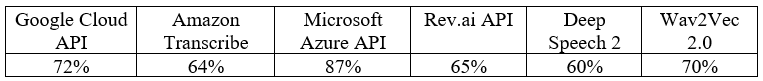

The benchmarking is done for this video using different APIs and custom-trained models. First, the video file is converted into an audio file and transcribed through APIs (e.g., Google, AWS, Microsoft Azure, etc.). Second, the audio file is transcribed by a human transcriptionist to approximately 100% accuracy. Finally, the API transcriptions and the human transcription are compared to calculate the Word Error Rate (WER).

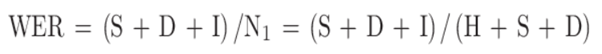

WER Methodology: If a word in the reference sequence is transcribed as a different word, it is called a substitution. When a word is completely missing from the automatic transcription, it is characterized as a deletion. The appearance of a word in the transcription that has no correspondence in the reference word sequence is called an insertion. The accuracy of a system is usually rated by the word error rate (WER), a popular ASR comparison index. It expresses the distance between the word sequence produced by an ASR system and the reference sequence. It is defined as:

$$\mathrm{WER} = \frac{I + D + S}{N_1} = \frac{I + D + S}{H + S + D}$$

Mathematical formula for calculating Word Error Rate (WER)

Here, I = the total number of insertions, D = the total number of deletions, S = the total number of substitutions, H = the total number of correctly recognized words (hits), and N1 = the total number of reference words. Despite being the most commonly used metric, WER has some drawbacks. It is not a true percentage because it has no upper bound. When S = D = 0 and there are two insertions for each reference word, then I = 2 × N1 (that is, the output is longer than the number of words in the prompt), which means WER = 200%. Therefore, WER does not tell how good a system is in absolute terms, only that one system is better than another. Moreover, in noisy conditions, WER could exceed 100%, as it gives far more weight to insertions than to deletions. All the accuracy scores are rounded to the nearest percentage.
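The definition above, WER = (I + D + S) / N1 with N1 = H + S + D, can be sketched in a few lines using a word-level edit distance followed by a backtrace that counts each error type. This is an illustrative implementation, not the exact scoring used for the benchmark: it splits on whitespace only (no lowercasing or punctuation removal), and when several alignments have the same total cost, the split between substitutions, deletions, and insertions depends on the tie-breaking order chosen here.

```python
from typing import Dict


def wer_counts(reference: str, hypothesis: str) -> Dict[str, int]:
    """Count hits (H), substitutions (S), deletions (D), and insertions (I)."""
    ref, hyp = reference.split(), hypothesis.split()
    n, m = len(ref), len(hyp)
    # dist[i][j] = minimum number of edits turning ref[:i] into hyp[:j]
    dist = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(n + 1):
        dist[i][0] = i  # delete every reference word
    for j in range(m + 1):
        dist[0][j] = j  # insert every hypothesis word
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            substitution = dist[i - 1][j - 1] + (ref[i - 1] != hyp[j - 1])
            dist[i][j] = min(substitution, dist[i - 1][j] + 1, dist[i][j - 1] + 1)

    # Walk back through the table to label each step of one optimal alignment.
    counts = {"H": 0, "S": 0, "D": 0, "I": 0}
    i, j = n, m
    while i > 0 or j > 0:
        if i > 0 and j > 0 and ref[i - 1] == hyp[j - 1] and dist[i][j] == dist[i - 1][j - 1]:
            counts["H"] += 1
            i, j = i - 1, j - 1
        elif i > 0 and j > 0 and dist[i][j] == dist[i - 1][j - 1] + 1:
            counts["S"] += 1
            i, j = i - 1, j - 1
        elif i > 0 and dist[i][j] == dist[i - 1][j] + 1:
            counts["D"] += 1
            i -= 1
        else:
            counts["I"] += 1
            j -= 1
    return counts


def wer(reference: str, hypothesis: str) -> float:
    """Word error rate as a fraction (multiply by 100 for a percentage)."""
    c = wer_counts(reference, hypothesis)
    n1 = c["H"] + c["S"] + c["D"]  # number of reference words
    if n1 == 0:
        raise ValueError("reference must contain at least one word")
    return (c["I"] + c["D"] + c["S"]) / n1


print(wer("the cat sat on the mat", "the cat sit on mat please"))  # 0.5
# Two insertions per reference word and no other errors gives 200%.
print(wer("a b", "a x y b z w"))  # 2.0
```

The second call reproduces the unbounded case discussed above: four insertions against two reference words yield a WER of 2.0, or 200%.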

Accuracy Results:

Accuracy scores obtained when the video mentioned above is sent through different models

Conclusion

End-to-end deep learning presents the exciting opportunity to improve speech recognition systems continually with increases in data and computation. Popular speech recognition APIs such as Google Cloud and Microsoft Azure are available if you do not have access to resources like a GPU cluster and want to get transcriptions for your requirement in the least expensive way. However, in the long run, it is generally better to train your own speech recognition model using the data and customizations that fit your purpose.

References

[1] https://www.ibm.com/cloud/learn/speech-recognition

[2] https://en.wikipedia.org/wiki/Speech_recognition

[3] https://cloud.google.com/speech-to-text#all-features

[4] https://www.kdnuggets.com/2018/12/activewizards-comparison-speech-processing-apis.html

[5] https://heartbeat.fritz.ai/the-3-deep-learning-frameworks-for-end-to-end-speech-recognition-that-power-your-devices-37b891ddc380

[6] https://heartbeat.fritz.ai/a-2019-guide-for-automatic-speech-recognition-f1e1129a141c

[7] https://blog.api.rakuten.net/top-10-best-speech-recognition-apis-google-speech-ibm-watson-speechapi-and-others/

[8] https://www.assemblyai.com/benchmark

[9] https://www.ncbi.nlm.nih.gov/pmc/articles/PMC7256403/

[10] Amodei, Dario et al. “Deep Speech 2 : End-to-End Speech Recognition in English and Mandarin.” ArXiv abs/1512.02595 (2016).

[11] Baevski, A., Zhou, H., Mohamed, A., & Auli, M. (2020). wav2vec 2.0: A Framework for Self-Supervised Learning of Speech Representations. ArXiv, abs/2006.11477.