Why do we need NLP?

As we know, data is generated whenever we speak, send messages, or carry out various other activities. Much of this data is in textual form, which is highly unstructured. The information present in it is not directly accessible unless it is read and understood manually. If we want to analyze hundreds, thousands, or millions of text documents, this requires a great deal of manual effort, and producing insights from such large volumes of text becomes very difficult. This is where we need Natural Language Processing (NLP) to process large amounts of text data.

What is natural language processing?

Natural language processing (NLP) is the ability of a computer program to understand, analyze, manipulate, and potentially generate human language.

In simple terms, NLP refers to the automatic handling of natural human language, such as speech or text.

The basic components of NLP are as follows:

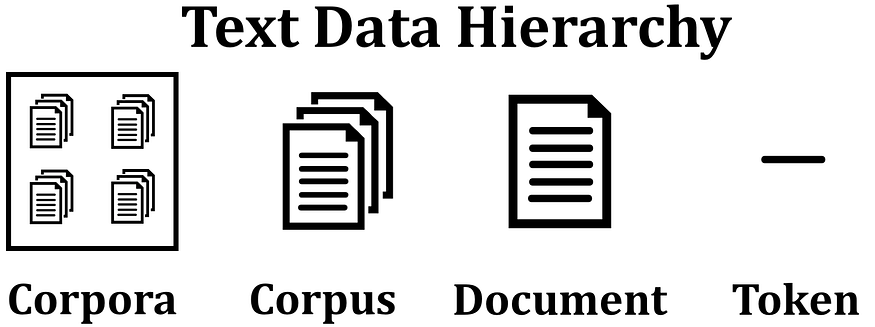

Corpus

A corpus is a collection of text documents. For example, a dataset of tweets collected from Twitter is a corpus. A corpus consists of documents, documents consist of paragraphs, paragraphs consist of sentences, and sentences consist of smaller units called tokens.
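The corpus hierarchy can be sketched as nested Python lists. This is a minimal illustration under simplifying assumptions: the two-document `corpus` is made up, paragraphs are assumed to be separated by blank lines, sentences are assumed to end with `.`, `!`, or `?`, and tokens come from splitting on whitespace (so punctuation stays attached to words). The helper `split_document` is hypothetical, not part of any library.

```python
import re

# A hypothetical two-document corpus (for example, two short tweets).
corpus = [
    "Diatoz is the best company. NLP is fun!",
    "Tokens are the building blocks of natural language.",
]

def split_document(document):
    """Naively split a document into paragraphs -> sentences -> tokens."""
    # Paragraphs: assumed to be separated by a blank line.
    paragraphs = [p for p in document.split("\n\n") if p.strip()]
    return [
        # Sentences: split after ".", "!" or "?" followed by whitespace;
        # tokens: split each sentence on whitespace.
        [sentence.split() for sentence in re.split(r"(?<=[.!?])\s+", paragraph.strip())]
        for paragraph in paragraphs
    ]

# structured[document][paragraph][sentence][token]
structured = [split_document(doc) for doc in corpus]
print(structured[0])
# [[['Diatoz', 'is', 'the', 'best', 'company.'], ['NLP', 'is', 'fun!']]]
```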

Tokens

Tokens are the building blocks of natural language. A token can be a word, a phrase, or an n-gram.

For example, with word-level tokens, a sentence of 10 words contains 10 tokens. In the sentence “Diatoz is the best company”, the tokens are “Diatoz”, “is”, “the”, “best”, and “company”.

N-grams

An n-gram is a group of n consecutive words. For example, consider the sentence “Diatoz is the best company.” In this sentence, “Diatoz”, “is”, “the”, “best”, and “company” are unigrams (n=1); “Diatoz is”, “is the”, “the best”, and “best company” are bigrams (n=2); and “Diatoz is the”, “is the best”, and “the best company” are trigrams (n=3). So unigrams consist of one word, bigrams of two consecutive words, and trigrams of three consecutive words.
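N-grams can be generated from a list of tokens by sliding a window of size n across it. The helper `ngrams` below is a hypothetical, minimal sketch that joins each window into a string; it assumes the sentence has already been tokenized on whitespace, and the example sentence is used without its final period for simplicity.

```python
def ngrams(tokens, n):
    """Return every run of n consecutive tokens, joined with spaces."""
    # There are len(tokens) - n + 1 windows; the range is empty if n > len(tokens).
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

tokens = "Diatoz is the best company".split()
print(ngrams(tokens, 1))  # ['Diatoz', 'is', 'the', 'best', 'company']
print(ngrams(tokens, 2))  # ['Diatoz is', 'is the', 'the best', 'best company']
print(ngrams(tokens, 3))  # ['Diatoz is the', 'is the best', 'the best company']
```

A sentence of k tokens always has k - n + 1 n-grams, which is why the five-word example yields five unigrams, four bigrams, and three trigrams. Libraries such as NLTK offer a similar helper (`nltk.util.ngrams`), which yields tuples of tokens rather than joined strings.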

Tokenization

Tokenization is the process of splitting a text object into smaller units called tokens. The most common approach is whitespace tokenization, in which the text is split into words wherever whitespace occurs. For example, whitespace tokenization of the sentence “Diatoz is the best company” produces “Diatoz”, “is”, “the”, “best”, and “company”.

We can also tokenize text using a regular expression pattern, which is known as regular expression tokenization. For example, given `sentence = "Diatoz, Infosys; Tech Mahindra"`, the call `re.split(r"[;,\s]+", sentence)` splits the text on commas, semicolons, and whitespace, producing the tokens “Diatoz”, “Infosys”, “Tech”, and “Mahindra”. The `+` treats a run of delimiters such as ", " as a single split point; without it, each such pair would leave an empty string among the tokens.
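Both tokenization approaches can be tried with the Python standard library. In this sketch, `whitespace_tokenize` and `regex_tokenize` are hypothetical helper names: the first relies on `str.split()`, which splits on runs of whitespace, and the second on `re.split` with the pattern `[;,\s]+`, where the `+` makes a run of delimiters such as ", " count as a single split point.

```python
import re

def whitespace_tokenize(text):
    """Split text into tokens wherever one or more whitespace characters occur."""
    return text.split()

def regex_tokenize(text, pattern=r"[;,\s]+"):
    """Split text on runs of the delimiters in `pattern`, dropping the empty
    strings re.split returns when the text starts or ends with a delimiter."""
    return [token for token in re.split(pattern, text) if token]

print(whitespace_tokenize("Diatoz is the best company"))
# ['Diatoz', 'is', 'the', 'best', 'company']
print(regex_tokenize("Diatoz, Infosys; Tech Mahindra"))
# ['Diatoz', 'Infosys', 'Tech', 'Mahindra']

# Without "+", "," and " " are matched separately, leaving empty tokens between them:
print(re.split(r"[;,\s]", "Diatoz, Infosys; Tech Mahindra"))
# ['Diatoz', '', 'Infosys', '', 'Tech', 'Mahindra']
```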

Stemming

Stemming is a rule-based process that removes the beginning or the end of a word in order to strip its affixes (prefixes and suffixes). The output is the stem of the word.

For example, “laughing”, “laugh”, “laughed”, and “laughs” all become “laugh”, their stem, because the suffixes are removed.

Stemming has limitations, however: it may produce something that is not an actual word, or a word with a different meaning.
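The rule-based nature of stemming, and its limitations, can be illustrated with a toy suffix stripper. The rules in `SUFFIX_RULES` and the `min_stem` length check are assumptions chosen for this example; this is not the Porter stemmer or any other published algorithm, which apply many more rules and conditions.

```python
# Hypothetical, minimal suffix rules for illustration only.
# Order matters: "ies" must be tried before "s".
SUFFIX_RULES = [("ies", "i"), ("ing", ""), ("ed", ""), ("s", "")]

def simple_stem(word, min_stem=3):
    """Apply the first matching suffix rule, keeping at least `min_stem` characters."""
    word = word.lower()
    for suffix, replacement in SUFFIX_RULES:
        if word.endswith(suffix) and len(word) - len(suffix) >= min_stem:
            return word[: len(word) - len(suffix)] + replacement
    return word  # no rule applies: the word is its own stem

for w in ["laughing", "laugh", "laughed", "laughs", "studies", "running"]:
    print(w, "->", simple_stem(w))
```

All four forms of “laugh” map to “laugh”, but “studies” becomes “studi” and “running” becomes “runn”, neither of which is an actual word. Real stemmers such as Porter's handle the doubled consonant in “running”, yet they still produce non-words like “studi”.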

Lemmatization

Lemmatization returns an actual word of the language. It makes use of vocabulary, word structure, part-of-speech (POS) tags, and grammatical relations, and it is used where valid words are required. The output of lemmatization is the root word, called a lemma.

For example, the words “running”, “runs” and “ran” are all forms of the word “run”, so “run” is the lemma of all the words.

Lemmatization resolves this limitation of stemming, because it always returns a valid word of the language.

Because lemmatization is a systematic process, we can specify the POS tag of the term we want to lemmatize, and the result depends on that tag. For example, if we lemmatize the word “running” as a verb, it is converted to “run”, but if we lemmatize the same word as a noun, it is left unchanged.
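The role of the POS tag can be shown with a toy dictionary-based lemmatizer. The `LEXICON` table and the `lemmatize` function are hypothetical; a real lemmatizer relies on a full vocabulary plus morphological rules, for example NLTK's `WordNetLemmatizer`, whose `lemmatize(word, pos=...)` method takes a POS argument in a similar way.

```python
# Hypothetical miniature lexicon mapping (word, POS tag) -> lemma.
# "v" = verb, "n" = noun.
LEXICON = {
    ("running", "v"): "run",
    ("runs", "v"): "run",
    ("ran", "v"): "run",
    ("runs", "n"): "run",
    ("running", "n"): "running",  # the noun "running" (the activity) is its own lemma
}

def lemmatize(word, pos="n"):
    """Return the lemma of `word` for the given POS tag, or the word unchanged if unknown."""
    return LEXICON.get((word.lower(), pos), word)

print(lemmatize("running", pos="v"))  # run
print(lemmatize("running", pos="n"))  # running
print(lemmatize("ran", pos="v"))      # run
```

Note that “ran” maps to “run” even though no suffix-stripping rule could produce it; this is where a vocabulary-based approach goes beyond stemming.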

End Notes

In this article, we went through the basics of Natural Language Processing: corpora, tokens, n-grams, tokenization, stemming, and lemmatization.

Let us know in the comments below if you have any questions about this article.